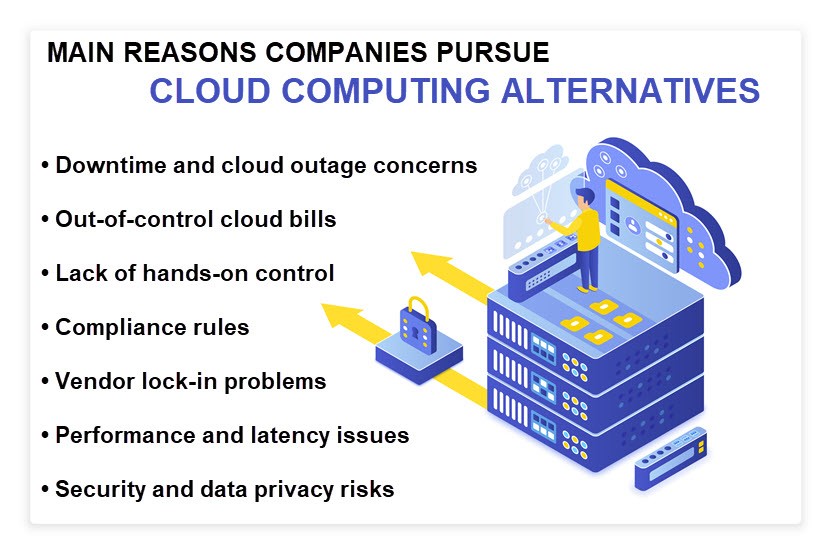

The cloud was an IT game-changer, but not every use case is a good fit for what cloud computing has to offer. Some workloads and apps do not work well (or at all) with remotely hosted virtual resources, which is why more and more organizations are exploring alternatives to cloud computing.

This article presents six cloud computing alternatives you should consider if the cloud is a financial or technical burden for your organization. Read on to see what technologies and strategies can "jump in" if a cloud-based system is not meeting expectations.

Check out our article on the advantages and disadvantages of cloud computing to get a clear picture of everything that comes with using this technology.

Cloud Computing Alternatives

Before you start considering alternatives to cloud computing, you need to know that there are different types of clouds, each intended for specific use cases. These models are:

- Public cloud (on-demand computing services managed by a third-party provider and delivered over the Internet or a private network).

- Private cloud (the same principle as the public cloud, but the owner company manages the underlying infrastructure and only in-house staff has access to resources).

- Hybrid cloud (combined use of a public and private cloud, plus on-site IT in some architectures).

- Multi-cloud (relying on multiple public cloud providers instead of a single vendor).

- Community cloud (a public cloud open only to a select user base with shared interests, such as the same cybersecurity risks or compliance rules).

- Distributed cloud (a single public cloud spread across multiple geographic locations).

Check out our cloud deployment models article for an in-depth look at each cloud type and its most appropriate uses. If you're still not finding the right fit, it's time to start looking at alternatives to cloud computing.

1. Fog Computing

Fog computing (also known as fogging) is a decentralized infrastructure that performs a portion of computing somewhere between the data source and the origin server (or the cloud). Here's how a fog-based environment processes data:

- Multiple endpoints (such as sensors or IoT devices) collect data.

- Endpoints send raw data to a nearby fog node (a gateway, router, or bridge with computing and storage capabilities) responsible for the local subset of endpoints.

- The fog node (which operates at the local area network (LAN) level) performs data processing and returns instructions to endpoints, ideally in or near real-time.

- The fog node contacts the origin server or the cloud whenever there's a need for more resource-intensive processing or if there's an error that requires human attention. Otherwise, data exchanges occur only between the fog and endpoint layers.
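
The flow above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the `Reading` type, the `handle_batch` function, the temperature thresholds, and the instruction names are all hypothetical and exist only to show which data stays local and which goes upstream.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    endpoint_id: str
    temperature_c: float


# Hypothetical thresholds, chosen only for illustration.
LOCAL_LIMIT_C = 80.0      # above this, the fog node tells the endpoint to throttle
ESCALATE_LIMIT_C = 100.0  # above this, the fog node also contacts the cloud


def handle_batch(readings):
    """Process one batch of raw readings at the fog node.

    Returns (instructions, escalations): instructions go back to the
    endpoints, escalations are the only items forwarded upstream.
    """
    instructions = {}
    escalations = []
    for r in readings:
        if r.temperature_c > ESCALATE_LIMIT_C:
            instructions[r.endpoint_id] = "shutdown"
            escalations.append(r)
        elif r.temperature_c > LOCAL_LIMIT_C:
            instructions[r.endpoint_id] = "throttle"
        else:
            instructions[r.endpoint_id] = "ok"
    return instructions, escalations


batch = [Reading("sensor-1", 42.0), Reading("sensor-2", 85.5), Reading("sensor-3", 104.2)]
instructions, escalations = handle_batch(batch)
print(instructions)                           # one instruction per endpoint
print([r.endpoint_id for r in escalations])   # ['sensor-3']
```

In this sketch, three raw readings reach the fog node, but only one is forwarded to the cloud. The other two are resolved entirely at the LAN level.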

Fog computing reduces bandwidth needs by sending less data to the cloud and performing short-term analytics at specific network access points. This strategy also lowers IT costs and network latency, and it's an excellent fit for:

- Mission-critical apps with little to no tolerance for communication delays.

- Large-scale, geographically distributed apps.

- Systems that oversee thousands of IoT devices and sensors.

The most notable benefits of fog computing are:

- A reduced volume of data goes to the cloud or origin server.

- Faster responses since the bulk of data processing occurs near the data source.

- Quicker alerts and remediation in case of an IT failure.

Fog computing also boosts security. Endpoints that send raw data straight to the cloud raise privacy concerns, whereas fogging keeps more data local, which means less data exposure and a smaller attack surface for data breaches. However, fog nodes are themselves a go-to target for some cyber attacks, such as IP address spoofing, DDoS, and man-in-the-middle attacks.

2. Edge Computing

Edge computing is a distributed IT architecture in which data processing happens at or as close to the source of data as possible. This tech reduces latency and bandwidth usage by processing data at local devices, such as an IoT device or an edge server.

There's an overlap between fog and edge computing (both bring computing closer to endpoints), but the two differ in terms of where they process data:

- Edge computing places compute resources at the device level (e.g., a small server and some storage atop a wind turbine to collect and process data produced by sensors).

- Fog computing places compute resources at the LAN level and relies on fog nodes responsible for numerous smart devices. Fogging is the go-to option when processing is too resource-heavy for a single edge server or when endpoints are too scattered to make edge computing possible.

An edge computing device must perform the following functions without having to contact the cloud, an origin server, or a fog node:

- Collect data from endpoints.

- Process raw info.

- Share timely insights with hierarchically higher systems.

- Assess which events and processed data require human attention.

- Take appropriate action and instruct edge devices.
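
As a rough sketch of these functions, the Python class below reduces raw samples to a summary, decides on a local action, flags events for human attention, and queues insights until an uplink is available. The `EdgeDevice` class, the vibration threshold, and the action names are assumptions made for illustration only.

```python
from collections import deque
from statistics import mean


class EdgeDevice:
    """Hypothetical edge device attached to a single machine's sensors."""

    def __init__(self, vibration_limit=5.0):
        self.vibration_limit = vibration_limit  # illustrative threshold
        self.outbox = deque()                   # insights waiting for an uplink

    def process(self, samples):
        """Reduce raw samples to a summary and decide on a local action."""
        summary = {
            "mean": mean(samples),
            "peak": max(samples),
            "count": len(samples),
        }
        # Flag only severe events for human attention.
        summary["needs_human"] = summary["peak"] > 2 * self.vibration_limit
        action = "stop" if summary["peak"] > self.vibration_limit else "continue"
        self.outbox.append(summary)  # queue the insight, not the raw samples
        return action

    def flush(self, uplink_available):
        """Send queued summaries upstream only when a connection exists."""
        if not uplink_available:
            return []
        sent = list(self.outbox)
        self.outbox.clear()
        return sent


device = EdgeDevice()
print(device.process([1.0, 2.0, 3.0]))             # continue
print(device.process([4.0, 6.0, 11.0]))            # stop
print(device.flush(uplink_available=False))        # []
print(len(device.flush(uplink_available=True)))    # 2
```

The device keeps acting on local data while the uplink is down and shares only the two summaries once the connection returns.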

Edge computing offers many benefits to the right use case, including:

- Real-time responses (even if there is no 24×7 internet access).

- More efficient bandwidth usage.

- Less chance of data congestion.

- Reduction in single points of failure (since each device operates independently).

- Enhanced privacy and data security (meeting regulatory and compliance requirements also becomes easier).

- Reduced operational costs.

According to a widely cited Gartner forecast, 75% of enterprise data processing will occur outside the traditional data center by 2025. Get an early start on this trend with phoenixNAP's edge solutions and boost the performance of both on-site and cloud-based apps.

You can also learn more about this technology by reading our articles on common edge computing challenges and an in-depth comparison of edge and cloud computing.

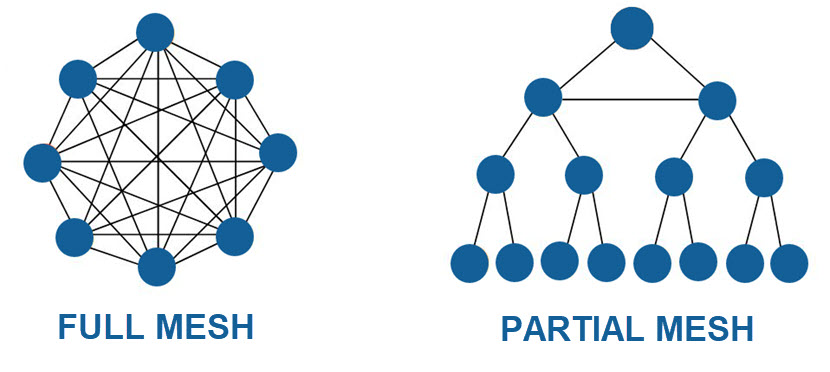

3. Mesh Computing

A mesh network (or meshnet) is an architecture in which the infrastructure nodes (bridges, switches, etc.) connect directly and non-hierarchically to as many other nodes as possible. Since there are at least two pathways to each node, there are numerous routes for data to travel, which makes meshnets a highly reliable design.

Another common name for a meshnet is a self-configuring network (since a new node automatically becomes part of the network's structure). Like most other alternatives to cloud computing, mesh computing addresses the main issue of the public cloud: it cannot reliably handle low-latency communications involving massive amounts of data. Meshnets have multiple benefits:

- Lightning-fast, consistent connections (peer nodes communicate directly without having to first go through the Internet).

- Reliable network coverage to a broad area (a single network can include hundreds of wireless nodes).

- No single points of failure (a single node going down does not compromise the rest of the network, so there's little to no chance of cloud outage-like scenarios).

- Increased range (a mesh easily covers "dead spots" where Wi-Fi signals do not reach).
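
To illustrate why a single node failure does not cut off the rest of a mesh, here is a minimal sketch that finds a route with a breadth-first search and then repeats the search with one node marked as failed. The four-node `MESH` topology and the `find_route` helper are hypothetical. Real mesh networks rely on dedicated routing protocols rather than a search like this one.

```python
from collections import deque

# Hypothetical mesh: each key lists the peers it links to directly.
MESH = {
    "A": {"B", "C"},
    "B": {"A", "C", "D"},
    "C": {"A", "B", "D"},
    "D": {"B", "C"},
}


def find_route(mesh, source, target, failed=frozenset()):
    """Breadth-first search for a shortest route that avoids failed nodes."""
    if source in failed or target in failed:
        return None
    queue = deque([[source]])
    visited = {source}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for peer in sorted(mesh[node]):  # sorted for a deterministic result
            if peer not in visited and peer not in failed:
                visited.add(peer)
                queue.append(path + [peer])
    return None


print(find_route(MESH, "A", "D"))                # ['A', 'B', 'D']
print(find_route(MESH, "A", "D", failed={"B"}))  # ['A', 'C', 'D']
```

When node B goes down, traffic from A to D simply takes the alternative route through C.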

Mesh networks are an excellent choice for the following use cases:

- Various types of high-risk monitoring (medical, industrial, etc.).

- Heavy machinery control.

- High-risk security systems.

- Electricity monitoring and microgrids.

- City-scale smart systems (e.g., smart street lighting).

There are two types of mesh network topologies:

- Full mesh (every node has a direct connection to every other node).

- Partial mesh (there are intermediate nodes that connect to each other and manage subgroups of "lesser" nodes).

Whether a company sets up a full or partial mesh depends on IT needs, traffic patterns, risk levels, and budget (full meshes are far more expensive to build because every added node needs a link to every existing node).
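
The cost difference is easy to quantify. A full mesh of n nodes needs n(n-1)/2 direct links, so the link count grows roughly with the square of the network size. The short sketch below (the function name `full_mesh_links` is only illustrative) shows how quickly that number climbs:

```python
def full_mesh_links(nodes: int) -> int:
    """Number of direct links needed so every node connects to every other."""
    return nodes * (nodes - 1) // 2


for n in (5, 10, 50, 100):
    print(n, full_mesh_links(n))
# 5 10
# 10 45
# 50 1225
# 100 4950
```

Going from 10 to 100 nodes multiplies the node count by 10 but the link count by 110, which is why large deployments usually settle for a partial mesh.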

Are high bills the main reason you are looking at cloud computing alternatives? Our article on cloud cost management tools presents 14 platforms that help keep cloud expenses in check.

4. Bare Metal Cloud (BMC)

Bare metal cloud enables a company to rent physical servers but deploy, scale, and manage them in a public cloud-like fashion. BMC servers are deployed automatically in just a couple of minutes with the requested OS or bare metal hypervisor. Server deployment and management can be performed via API and CLI, which provides automation opportunities, similar to public cloud.

Here's what sets BMC apart from regular cloud services:

- Servers provide raw hardware resources without the hypervisor layer to slow down performance (essentially the same effect as having a dedicated server, either on-site or hosted by a managed service provider (MSP)).

- Users have more control over the setup (both in terms of security configuration and component/software customization).

- BMC is a single-tenant environment, so there's no resource contention (the so-called "noisy neighbor" problem).

- Since there are no other tenants, there's no risk of incomplete isolation of execution environments and virtual networks.

- There's no added overhead from nested virtualization (for example, when containers run within a lightweight virtual machine (VM)).

Unlike traditional bare metal servers, BMC offers cloud-like agility. Instances are available on demand, servers are highly scalable, and the environment is cloud native and optimized for Kubernetes and container orchestration in general. Bare Metal Cloud provides DevOps infrastructure for teams that require scalable bare metal with plenty of automation opportunities.

Here are a few use cases that are an excellent fit with a bare metal cloud server:

- Big data apps.

- Apps with high-transaction workloads and low-latency tolerance (e.g., a fintech app with real-time market info).

- Competitive gaming servers.

- AI and machine learning systems with complex algorithmic operations and natural language processing (NLP).

- Websites for live coverage of events and conventions.

- High-performance computing (HPC).

- Real-time analytics.

Is BMC looking like a good fit for your use case? Deploy one of our Bare Metal Cloud servers for as low as $0.08 per hour and "test the waters" before going all-in on this hosting solution.

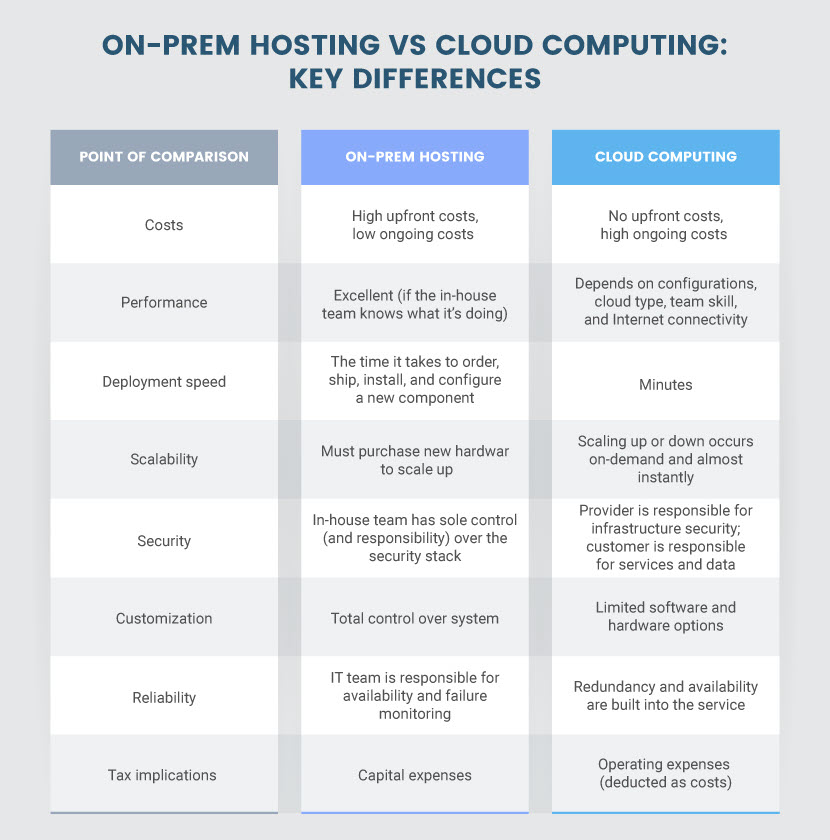

5. On-Prem Hosting

The most obvious of all alternatives to cloud computing is to opt for on-prem hosting. You set up an on-site server room and equip it with all the hardware you need to run apps and workloads in-house.

An on-prem infrastructure has several key advantages over cloud computing:

- The company owns all hardware, so there's complete control over how systems run and who has access to them.

- On-prem systems often perform better than cloud-based hosting (especially if you have predictable usage patterns). Low latency, no external dependencies, and direct control over availability all boost performance.

- Long-term costs of on-prem hosting are often lower than using the cloud. Once you purchase all equipment, the main ongoing expenses are power, maintenance, and staff.

- The in-house team has complete control over security. The staff sets up any access and security controls you need, and there's no third party to cause additional risk.

- Meeting compliance requirements is often easier on-prem than in the cloud (especially if you must adhere to stricter regulations and standards like HIPAA or PCI DSS).

Despite these advantages, there are also a few noteworthy drawbacks of running workloads and services on-prem:

- Setting up an in-house IT system requires an expensive upfront investment. You must pay for hardware, software licenses, appropriate office space, networking, and a team of capable sysadmins.

- The infrastructure is only as reliable as your team's server management skills.

- The company is solely responsible for setting up redundancy, disaster recovery, and data backups.

- Scaling is inflexible (scaling up requires new hardware investments; scaling down requires setting aside equipment you already paid for).

- The company has complete control over the physical and network security, but there's no outside help to share the burden.
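
The trade-off between a high upfront investment and lower running costs comes down to a break-even calculation. The sketch below is a simplified model with made-up figures: it assumes flat monthly costs and ignores hardware refresh cycles, depreciation, and changes in usage, all of which a real comparison should account for.

```python
import math


def break_even_month(upfront, onprem_monthly, cloud_monthly):
    """First month in which cumulative on-prem cost is at or below cloud cost.

    Returns None when on-prem never catches up (its running cost is not lower).
    """
    if onprem_monthly >= cloud_monthly:
        return None
    return math.ceil(upfront / (cloud_monthly - onprem_monthly))


# All figures are hypothetical and exist only to show the arithmetic.
print(break_even_month(upfront=120_000, onprem_monthly=3_000, cloud_monthly=8_000))  # 24
```

With these example numbers, on-prem hosting starts paying off after two years. If the monthly on-prem cost matches or exceeds the cloud bill, the upfront investment never pays for itself.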

If you decide to re-host cloud-based apps back on-site, you'll have to go through repatriation (pulling assets from the cloud back to on-prem storage). Our data and cloud repatriation articles offer an in-depth look at how companies plan for these processes.

6. Colocation Hosting

Colocation (or colo) is a data center service in which a third-party facility provides space for privately owned servers and other computing hardware. Customers rent space by the rack, cabinet, cage, or room, depending on the amount of equipment that needs housing.

Every provider offers unique services, but most top-tier facilities provide the following as part of their colo package:

- Physical security of hardware.

- All the networking, bandwidth, power, and cooling required to run the equipment.

- Server monitoring.

- Redundant internet connectivity and power (the latter is typically ensured through uninterruptible power supplies (UPS) and backup generators).

- On-site technical support (this feature increases the price of colo services).

- Access to a meet-me room.

Colocation services are ideal for any company that already owns hosting equipment but does not want to invest in an on-site server room. Colo services offer the following advantages when compared to the cloud:

- Leasing space in a colo facility is often less expensive than paying for cloud-based resources (especially for more resource-intensive IT operations).

- Companies get to use servers and storage hardware of their own choosing.

- Customers control their IT equipment despite hosting it at a third-party facility.

- Meeting data compliance requirements is significantly easier with a colo service than with public cloud storage.

Despite the positives, there are a few disadvantages of relying on colocation. Scaling up requires new upfront purchases, and prolonged reliance on one provider may lead to vendor lock-in. Also, the distance between your office and the colo center can prolong the effects of failures and errors as your in-house team must travel to the facility to handle the issue (unless you opt for managed services).

Looking for colo services in Arizona? There's no beating our flagship colocation facility in Phoenix, the first data center in AZ to get an AWS Direct Connect.

Always Consider Alternatives to Cloud Computing Before Committing to the Cloud

While the cloud is powerful, pursuing cloud migration should not be a rushed matter. Organizations must carefully consider alternatives to cloud computing before committing to any hosting solution. Remember that it's far more costly to switch infrastructures on an already-running system, so know your options from the start and make good choices to avoid future headaches.