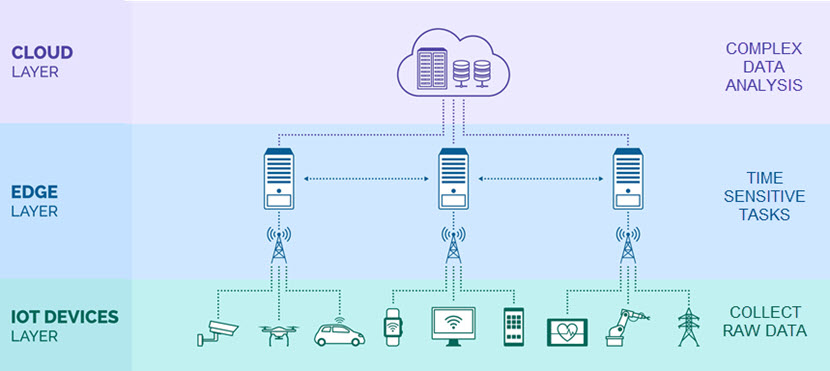

In a classic IoT architecture, smart devices send collected data to the cloud or a remote data center for analysis. Large volumes of data traveling to and from a device can cause bottlenecks that make this approach ineffective for latency-sensitive use cases.

IoT edge computing solves this issue by bringing data processing closer to IoT devices. This strategy shortens the data route and enables the system to perform near-instant on-site data analysis.

This article is an introduction to IoT edge computing and the benefits of acting on data as close to its source as possible. Read on to learn why edge computing is a critical enabler for IoT use cases in which the system must capture and analyze massive amounts of data in real time.

What Is IoT Edge Computing?

IoT edge computing is the practice of processing data at the network's edge to speed up an IoT system. Instead of sending raw IoT data to a remote server, a smart device hands it to a nearby edge server for processing.

Data processing close to or at the point of origin keeps latency to a minimum because data no longer makes a round trip to a distant data center. This feature can make or break the functionality of an IoT device that runs time-sensitive tasks.
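
To put rough numbers on the distance argument, the sketch below estimates the lowest possible round-trip time over optical fiber, assuming signals travel at about 200,000 km/s (roughly two-thirds of the speed of light in a vacuum). The function name and the example distances are illustrative assumptions. Real latency is higher because of routing, queuing, and processing time.

```python
# Approximate signal speed in optical fiber (about 2/3 of c in a vacuum).
FIBER_SPEED_KM_PER_S = 200_000


def min_round_trip_ms(distance_km):
    """Lower bound on round-trip time in milliseconds for a one-way distance."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


# Illustrative distances: a remote cloud region vs. an on-site edge server.
remote_ms = min_round_trip_ms(1500)  # about 15 ms before any processing
local_ms = min_round_trip_ms(1)      # about 0.01 ms
print(f"remote: {remote_ms:.2f} ms, local: {local_ms:.2f} ms")
```

Even this best-case figure shows why latency can be reduced but never reach zero, and why shortening the data route matters for time-sensitive tasks.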

Moving data processing physically closer to IoT devices offers a range of benefits to enterprise IT, such as:

- Faster, more reliable services.

- A smoother customer experience.

- Real-time, on-site analytics.

- The ability to filter and aggregate raw data to reduce traffic sent to an external server or the cloud.

- Lower operational costs (OpEx) due to less bandwidth usage and smaller data center capacity needs.

- Higher security due to fewer external connections and less room for potential lateral movement.
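
As a minimal sketch of the "filter and aggregate" benefit above, the snippet below collapses one window of raw sensor readings into a single summary record before anything leaves the site. The function name, the record fields, and the sample values are assumptions made for illustration.

```python
from statistics import mean


def aggregate_window(readings):
    """Collapse one window of raw readings into a compact summary record."""
    if not readings:
        raise ValueError("window is empty")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


# Hypothetical input: 60 one-per-second temperature samples.
window = [20.0 + (i % 5) * 0.1 for i in range(60)]
summary = aggregate_window(window)
print(summary)  # one record goes upstream instead of 60 raw values
```

Sending one summary per window instead of every raw sample is where the bandwidth and storage savings come from.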

IoT edge computing is a vital enabler for IoT as this strategy allows you to run a low-latency app on an IoT device reliably. Edge processing is an ideal option for any IoT use case that:

- Requires real-time decision-making.

- Has potentially catastrophic failures.

- Deals with extreme amounts of data.

- Runs in an environment where cloud connectivity is either sparse or entirely unavailable.

Cloud and edge computing are not mutually exclusive. The two paradigms complement each other: an edge server (either in the same region or on the same premises) handles time-sensitive tasks and sends filtered data to the cloud for further, more time-consuming analysis.
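
The division of labor described above might look like the following sketch. The `EdgeNode` class, the alarm threshold, and the "stop" action are hypothetical. The point is only that the urgent decision happens locally, while the cloud receives a filtered subset of events.

```python
class EdgeNode:
    """Toy edge server: acts on urgent readings locally, forwards only events."""

    def __init__(self, alarm_threshold):
        self.alarm_threshold = alarm_threshold
        self.local_actions = []  # time-sensitive decisions made on site
        self.cloud_outbox = []   # filtered records queued for cloud analysis
        self.normal_count = 0    # routine readings are counted, not forwarded

    def handle(self, device_id, value):
        if value >= self.alarm_threshold:
            # Urgent: react immediately without waiting for the cloud.
            self.local_actions.append((device_id, "stop"))
            self.cloud_outbox.append(
                {"device": device_id, "value": value, "event": "alarm"}
            )
            return "acted-locally"
        self.normal_count += 1
        return "ok"


node = EdgeNode(alarm_threshold=90.0)
for reading in [70.0, 72.5, 95.0, 71.0]:
    node.handle("pump-1", reading)

print(node.local_actions)      # decisions taken at the edge
print(len(node.cloud_outbox))  # records that will travel to the cloud
```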

Edge Devices vs IoT Devices

IoT edge computing relies on the combined use of both edge and IoT devices:

- An IoT device is a machine connected to the Internet that can generate and transmit data to the processing unit (either an edge device, the cloud, or a central server). These devices usually have special-purpose sensors and serve a single purpose.

- An edge device is a piece of hardware that operates near the user or device that generates raw data. These devices have enough computing resources to process data and make decisions with sub-millisecond latencies, a speed impossible to reach if data must first travel to a remote data center.

In some cases, the terms edge device and IoT device are interchangeable. An IoT device can also be an edge device if it has enough compute resources to make low-latency decisions and process data. Likewise, an edge device can be a part of IoT if it has a sensor that generates raw data.

However, building devices with both IoT and edge capabilities is often not cost-effective. A better option is to deploy multiple cheaper IoT devices that generate data and connect all of them to a single edge server capable of processing data.

How Do IoT and Edge Computing Work Together?

Edge computing provides an IoT system with a local source of data processing, storage, and computing. The IoT device gathers data and sends it to the edge server. The server then analyzes the data at the edge of the local network, which enables faster, more readily scalable data processing.

When compared to the usual design that involves sending data to a central server for analysis, an IoT edge computing system has:

- Reduced latency of communication between the IoT device and the network.

- Faster response times and increased operational efficiency.

- Lower network bandwidth consumption, as the system only streams to the cloud the data that needs long-term storage or further analysis.

- The ability to continue operating even if the system loses connection with the cloud or the central server.
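
One common way to keep operating through a lost cloud connection is a store-and-forward buffer. The sketch below is a minimal version under stated assumptions: `StoreAndForward`, `fake_uplink`, and the capacity of three records are all invented for the example, and the uplink is simulated rather than a real network call.

```python
from collections import deque


class StoreAndForward:
    """Buffer records while the uplink is down and flush them once it is back."""

    def __init__(self, send, capacity=1000):
        self._send = send                      # callable, returns True on success
        self._buffer = deque(maxlen=capacity)  # oldest records drop when full

    def __len__(self):
        return len(self._buffer)

    def publish(self, record):
        self._buffer.append(record)
        self.flush()

    def flush(self):
        """Send buffered records in order; stop at the first failure."""
        while self._buffer:
            if not self._send(self._buffer[0]):
                return False
            self._buffer.popleft()
        return True


# Simulated uplink that can be switched on and off.
link = {"up": False}
delivered = []


def fake_uplink(record):
    if link["up"]:
        delivered.append(record)
    return link["up"]


queue = StoreAndForward(fake_uplink, capacity=3)
for n in range(5):
    queue.publish({"seq": n})  # link is down, so records pile up locally

link["up"] = True
queue.flush()
print([r["seq"] for r in delivered])
```

The bounded buffer is a deliberate trade-off: an edge device has limited storage, so this sketch drops the oldest records when the outage outlasts its capacity.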

Edge computing is an efficient, cost-effective way to use the Internet of Things at scale without risking network overloads. A business relying on IoT edge also lowers the impact of a potential data breach. If someone breaches an edge device, the intruder will only have access to local raw data (unlike what happens if someone hacks a central server).

The same "smaller blast radius" logic applies to accidental data leaks and similar threats to data integrity.

Additionally, edge computing offers a layer of redundancy for mission-critical IoT tasks. If a single local unit goes down, other edge servers and IoT devices can go on operating without issues. There are no single points of failure that can bring all operations down to a halt.

Deploying to the edge is on the rise across all verticals of enterprise IT. Learn what other strategies are currently making an impact in our article on cloud computing trends for 2022.

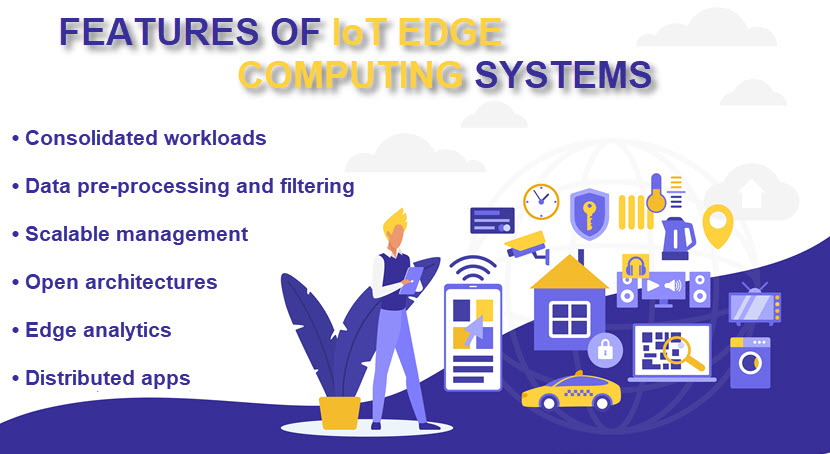

IoT Edge Computing Features

While each IoT edge computing system has unique traits, most deployments share several characteristics. Below are six features common to IoT edge computing use cases.

Consolidated Workloads

An older edge device typically runs proprietary apps on top of a proprietary RTOS (real-time operating system). A cutting-edge IoT edge system has a hypervisor that abstracts the OS and app layers from the underlying hardware.

Using a hypervisor enables a single edge computing device to run multiple OSes, which:

- Paves the way for workload consolidation.

- Reduces the physical footprint required at the edge.

As a result, the price of deploying to the edge is far lower than what you once had to pay to set up a top-tier edge computing system.

Pre-Processing and Data Filtering

Earlier edge systems typically worked by having the remote server request a value from the edge regardless of whether there were any recent changes. An IoT edge computing system can pre-process data at the edge (usually via an edge agent) and only send the relevant info to the cloud. This approach:

- Reduces the chance of data bottlenecks.

- Improves system response rates.

- Lowers cloud storage and bandwidth costs.
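
A simple form of this pre-processing is report-by-exception, where the edge agent forwards a value only when it differs from the last forwarded value by at least a set deadband. The class name, the 0.5-degree deadband, and the readings below are assumptions for illustration.

```python
class DeadbandFilter:
    """Forward a reading only if it moved at least `deadband` since the last send."""

    def __init__(self, deadband):
        self.deadband = deadband
        self._last_sent = None

    def should_send(self, value):
        if self._last_sent is None or abs(value - self._last_sent) >= self.deadband:
            self._last_sent = value
            return True
        return False


readings = [20.0, 20.1, 20.2, 21.0, 21.1, 19.5]
agent = DeadbandFilter(deadband=0.5)
forwarded = [r for r in readings if agent.should_send(r)]
print(forwarded)  # [20.0, 21.0, 19.5]
```

Here three of six readings reach the cloud. On a slowly changing signal, the reduction is usually much larger.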

Scalable Management

Older edge resources often used serial communication protocols that were difficult to update and manage at scale. A business can now connect IoT edge computing resources to local or wide area networks (LAN or WAN), enabling central management.

Edge management platforms are also increasing in popularity as providers look to even further streamline tasks associated with large-scale edge deployments.

Open Architecture

Proprietary protocols and closed architectures were common in edge environments for years. Unfortunately, these features often lead to high integration and switching costs due to vendor lock-ins, which is why modern edge computing relies on an open architecture with:

- Standardized protocols (e.g., OPC UA, MQTT).

- Semantic data structures (e.g., Sparkplug).

Open architecture reduces integration costs and increases vendor interoperability, two critical factors for the viability of IoT edge computing.
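
To illustrate what a standardized structure buys you, the sketch below builds MQTT topic strings that follow the Sparkplug B namespace, `spBv1.0/group_id/message_type/edge_node_id/[device_id]`. The helper name and the example IDs are hypothetical, the `STATE` message type is left out for brevity, and the character check reflects the general rule that IDs must not contain MQTT's reserved `/`, `+`, and `#` characters. Treat it as a sketch rather than a complete implementation of the specification.

```python
NODE_TYPES = {"NBIRTH", "NDEATH", "NDATA", "NCMD"}    # edge node level messages
DEVICE_TYPES = {"DBIRTH", "DDEATH", "DDATA", "DCMD"}  # device level messages


def sparkplug_topic(group_id, message_type, edge_node_id, device_id=None):
    """Build a Sparkplug B style topic string (hypothetical helper)."""
    for part in (group_id, edge_node_id, device_id):
        if part is not None and (not part or any(c in part for c in "/+#")):
            raise ValueError(f"invalid identifier: {part!r}")
    if message_type in NODE_TYPES:
        if device_id is not None:
            raise ValueError("node-level messages take no device_id")
        return f"spBv1.0/{group_id}/{message_type}/{edge_node_id}"
    if message_type in DEVICE_TYPES:
        if device_id is None:
            raise ValueError("device-level messages need a device_id")
        return f"spBv1.0/{group_id}/{message_type}/{edge_node_id}/{device_id}"
    raise ValueError(f"unsupported message type: {message_type!r}")


# Made-up identifiers for a plant, an edge gateway, and one sensor.
print(sparkplug_topic("plant-1", "NBIRTH", "gateway-a"))
print(sparkplug_topic("plant-1", "DDATA", "gateway-a", "temp-sensor-7"))
```

Because every vendor's device publishes under the same topic layout, a consumer can subscribe and parse data without per-vendor integration code.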

Want to learn more about system architectures? Check out our in-depth articles on cloud computing architecture, hybrid cloud architecture, and cloud-native architecture.

Edge Analytics

Earlier versions of edge devices had limited processing power and could typically perform a single task, such as ingesting data.

Nowadays, an IoT edge computing system has more powerful processing capabilities for analyzing data at the edge. This feature is vital to low-latency and high-throughput use cases that earlier edge systems could not reliably handle.
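
As one small example of analysis that can run at the edge, the sketch below flags readings that deviate strongly from a rolling window of recent values (a z-score test). The class name, window size, warm-up length, and threshold are assumptions chosen for the example, not recommended settings.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag values far from the mean of recent, non-anomalous readings."""

    def __init__(self, window=20, threshold=3.0, warmup=5):
        self._values = deque(maxlen=window)
        self.threshold = threshold
        self.warmup = warmup  # readings needed before scoring starts

    def is_anomaly(self, value):
        anomalous = False
        if len(self._values) >= self.warmup:
            mu = mean(self._values)
            sigma = pstdev(self._values)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                anomalous = True
        if not anomalous:
            # Keep outliers out of the baseline so they do not skew it.
            self._values.append(value)
        return anomalous


detector = RollingAnomalyDetector()
stream = [10.0, 10.1, 9.9, 10.0, 10.2, 9.8, 10.1, 25.0, 10.0]
anomalies = [v for v in stream if detector.is_anomaly(v)]
print(anomalies)  # [25.0]
```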

Distributed Apps

Intelligent IoT edge computing resources de-couple apps from the underlying hardware. This feature enables a flexible architecture in which an app can move between compute resources both:

- Vertically (e.g., from the edge resource to the cloud).

- Horizontally (e.g., from one edge computing resource to another).

A business can deploy an edge app in three types of architectures:

- 100% edge: This architecture has all compute resources on-premises. This design is popular with organizations that do not wish to send data off-premises, typically due to security concerns. A business that is okay with heavy on-prem investments is also a typical adopter.

- Thick edge + cloud architecture: This design includes an on-prem data center, a cloud deployment, and edge compute resources. It is a common choice for companies that already invested heavily in an on-prem data center but later decided to use the cloud to aggregate and analyze data (typically from multiple facilities).

- Thin (or micro) edge + cloud architecture: This approach always includes cloud compute resources connected to one (or more) smaller edge compute resources. There are no on-prem data centers in this design.
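
Once apps are decoupled from hardware, something has to decide where each app runs. The sketch below is a toy placement policy, not a real orchestrator: it keeps only targets that meet the app's latency budget and CPU needs, prefers the cloud when it qualifies (to keep scarce edge capacity free), and otherwise picks the fastest edge target. All names, fields, and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Target:
    name: str
    tier: str             # "edge" or "cloud"
    round_trip_ms: float  # measured latency from the data source
    free_cpu: float       # spare CPU cores


def place(latency_budget_ms, cpu_needed, targets):
    """Return the chosen target, or None if nothing satisfies the constraints."""
    candidates = [
        t for t in targets
        if t.round_trip_ms <= latency_budget_ms and t.free_cpu >= cpu_needed
    ]
    if not candidates:
        return None
    # Prefer cloud when it fits; among equals, prefer lower latency.
    return max(candidates, key=lambda t: (t.tier == "cloud", -t.round_trip_ms))


targets = [
    Target("edge-a", "edge", 2.0, 1.0),
    Target("edge-b", "edge", 3.0, 4.0),
    Target("cloud-1", "cloud", 80.0, 64.0),
]

tight = place(10, 2, targets)     # edge-a is full, so the app lands on edge-b
relaxed = place(200, 2, targets)  # latency allows it, so the app moves to the cloud
print(tight.name, relaxed.name)
```

The first call corresponds to a horizontal move between edge resources, and the second to a vertical move from the edge to the cloud.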

Learn how to securely connect distributed apps and see how you can make the most out of this modern approach to IT.

IoT Edge Computing Use Cases

Edge computing can play a vital role in any IoT design that requires low latency or local data storage. Here are a few interesting use cases:

- Industrial IoT (IIoT): IoT sensors can track the state of industrial machinery, identifying issues such as breakdowns or overuse. Meanwhile, an edge server can respond to problems before a potential disaster.

- Self-driving cars: An autonomous vehicle driving down the road must be able to collect and process real-time data (traffic, pedestrians, street signs, stop lights, etc.). Autonomous cars are an ultra-low-latency use case, so processing data on board at the edge is what allows a self-driving car to stop or turn quickly enough to avoid an accident.

- Automated truck convoys: IoT edge computing can also enable a business to create an automated truck convoy. A group of IoT-powered trucks can travel close behind one another in convoy, saving fuel costs and decreasing congestion. In that scenario, only the first truck would require a human driver.

- Visual inferencing: A high-resolution camera with an IoT edge computer can consume video streams and perform inferencing on the collected data. That equipment can detect people with high temperatures, intruders in restricted areas, safety violations, anomalies on production lines, etc.

- Remote condition-based monitoring: In a scenario where a failure can be catastrophic (e.g., an oil or gas pipeline), using IoT edge to monitor the system is a no-brainer. An IoT sensor can track an asset's condition (e.g., temperature, pressure, or stress), and the edge server can recognize and respond to a potential issue in milliseconds.
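
For the condition-based monitoring case, the core check an edge server runs on each sample can be as simple as comparing several metrics against operating limits. The metric names and limit values below are invented for illustration and are not real pipeline tolerances.

```python
# Hypothetical operating limits: metric -> (lowest allowed, highest allowed).
LIMITS = {
    "temperature_c": (-10.0, 80.0),
    "pressure_bar": (1.0, 70.0),
}


def violations(sample, limits=LIMITS):
    """Return (metric, problem) pairs for every limit the sample breaks."""
    found = []
    for metric, (low, high) in limits.items():
        value = sample.get(metric)
        if value is None:
            found.append((metric, "missing"))  # a silent sensor is also a finding
        elif value < low:
            found.append((metric, "low"))
        elif value > high:
            found.append((metric, "high"))
    return found


sample = {"temperature_c": 85.2, "pressure_bar": 40.0}
problems = violations(sample)
print(problems)  # [('temperature_c', 'high')]
```

Because the check runs next to the asset, the response does not depend on a round trip to the cloud.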

Edge computing is still a relatively novel concept, so it is natural that some companies are having a hard time deploying the technology. Our article on edge computing challenges explains the most common roadblocks and, more importantly, how to overcome them.

IoT Edge Computing: A Game-Changer for Enterprise IT

Today, the IoT sector operates across numerous scenarios without edge computing. However, as the number of connected devices grows and businesses explore new use cases, the ability to retrieve and process data faster will become a decisive factor. Expect IoT edge computing to play a pivotal role in years to come as more and more companies start pursuing the benefits of near-zero-latency data processing.