Introduction

Docker is a popular tool for deploying and running containerized applications. It is known for its reliability, resource efficiency, and scalability, making it a frequent choice for development teams.

In this article, you will learn what Docker is, its essential components, and the pros and cons of using the platform.

Introduction to Containerization

Containerization is a development process that involves shipping an application together with the dependencies it needs in an executable unit called a container. The concept of a container is explained in detail in the following sections.

What Are Containers?

Containers are lightweight virtualized runtime environments for running applications. A container represents a software package with code, system tools, runtime, libraries, dependencies, and configuration files required for running a specific application. Each container is independent and isolated from the host and other containers.

A template containing instructions and data necessary to build the container is called an image. Images are immutable (i.e., they cannot be changed), so any modification in the structure and content requires creating a new Docker image.

Containers vs. Virtual Machines

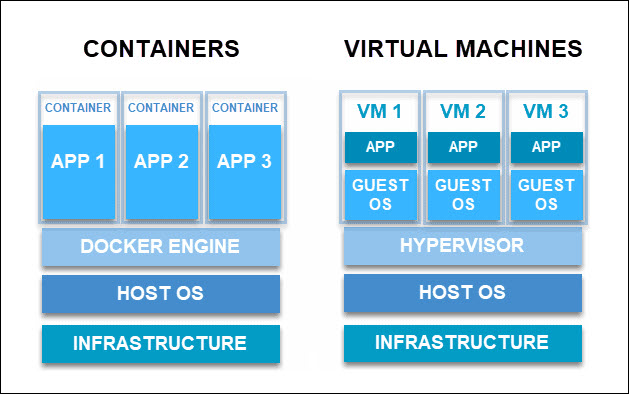

Containers perform virtualization at the application level. This means they share the operating system kernel with the host and virtualize on top of it.

Virtual machines are entirely independent of the host OS because they sit atop a hypervisor that isolates them. Each VM requires its own operating system, which can differ from the host's.

The diagram above illustrates the difference between containers and virtual machines.

Virtual machines often consume a lot of host system resources. A VM running a small web app still needs to run the entire OS in the background.

Containers are lightweight, fast, and simple to configure. They allow developers to work on the same application in different environments without affecting performance.

Note: To maximize container performance, implement Docker container management best practices.

Why Use Containers?

Containers bring numerous benefits to the software development process. Integrating containers into a development workflow allows developers to:

- Run multiple workloads on fewer resources.

- Isolate and segregate applications.

- Standardize environments to ensure consistency across development and release cycles.

- Streamline the software development lifecycle (SDLC) and support CI/CD workflows.

- Develop highly portable workloads that can run on multi-cloud platforms.

- Introduce an effective version control system for an application.

What Is Docker?

Docker is an open-source containerization platform for developing, deploying, and managing container applications. The project started as a Platform as a Service solution called DotCloud. However, many developers showed great interest in the underlying technology, software containers, which soon became the platform's focus.

Development teams mainly use Docker to create distributed applications that work efficiently in different environments. By making the software system-agnostic, Docker eliminates compatibility issues and allows the creation of cross-platform apps.

How Does Docker Work?

Docker is a client-server platform, i.e., it enables multiple clients to control deployments on a single server. The following sections explain the essential elements of Docker's architecture, introduce Docker objects, and list tools commonly used with the platform.

Docker Architecture

The main element of Docker architecture is the Docker Engine (DE). It comprises a lightweight runtime system and the underlying client-server technology that creates and manages containers.

Docker Engine consists of three components:

- Server. Docker's server is a daemon process (dockerd) responsible for creating and managing containers.

- REST API. Docker uses a REST API to establish communication with the clients that instruct dockerd what to do.

- Command-Line Interface (CLI). Each Docker client communicates with the daemon by issuing Docker CLI commands.

The sections below explain some of the essential concepts in Docker architecture.

Docker Daemon

The Docker daemon is a persistent background process created by the Docker Engine. This process accepts and fulfills API requests related to building, running, and managing Docker containers and images. The daemon uses a REST API that enables users to issue Docker CLI commands or use a client library to perform container-related actions.
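
To make the role of the REST API more concrete, the Python sketch below sends a request to the daemon directly, without the Docker CLI. It assumes a Linux host where the daemon listens on the default Unix socket /var/run/docker.sock and uses the Engine API endpoint that lists containers. The class and function names are hypothetical helpers written for this example, not part of any Docker library.

```python
import http.client
import json
import socket
from urllib.parse import urlencode

# Assumption: default socket path of the Docker daemon on Linux.
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixSocketConnection(http.client.HTTPConnection):
    """HTTP connection that talks to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        # The host name is only used for the Host header.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def containers_path(include_stopped=False):
    """Build the request path for the Engine API 'list containers' endpoint."""
    query = urlencode({"all": "true" if include_stopped else "false"})
    return f"/containers/json?{query}"


def list_containers(socket_path=DOCKER_SOCKET, include_stopped=False):
    """Ask the daemon for its containers and return the decoded JSON list."""
    conn = UnixSocketConnection(socket_path)
    try:
        conn.request("GET", containers_path(include_stopped))
        response = conn.getresponse()
        return json.loads(response.read())
    finally:
        conn.close()
```

On a host with a running daemon and sufficient permissions on the socket, calling list_containers(include_stopped=True) should return roughly the same information that the docker ps -a command displays.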

Docker Host

A Docker host is any system with a running Docker instance. Hosts interact with the Docker daemon to create and manage containers.

While the Docker host is commonly a single, independent machine, container orchestrators such as Docker Swarm and Kubernetes can distribute the role of a single host across multiple systems. Distributed hosts facilitate scaling and container orchestration.

Docker Client

A Docker client is a tool that enables users to interact with the Docker daemon on their system. The client features a set of Docker CLI commands that enable the creation and management of containers.

Docker Objects

Docker objects are components of a Docker deployment that help package and distribute applications. The following sections present the essential Docker objects.

Docker Container

A container is a runtime environment containing the application code alongside the dependencies (libraries and files). Docker containers are designed to comply with the Open Container Initiative (OCI) specifications, making them compatible with other OCI container engines.

Docker Image

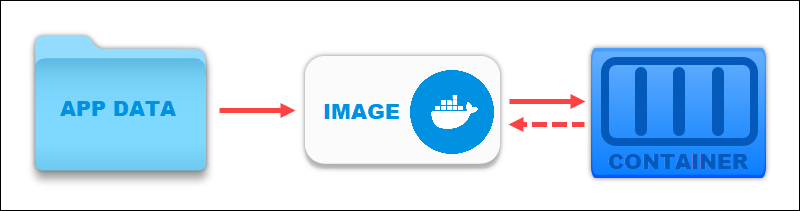

A Docker image is a template for building containers. Like virtual machine snapshots, Docker images are immutable, read-only files consisting of the source code, libraries, dependencies, tools, and other files necessary to run an application.

Docker CLI commands such as docker run and docker create use the app data in the image to create a Docker container that runs the given app.

Note: Maintaining small images is essential for producing lightweight, fast containers. Utilizing a lighter image base, avoiding unnecessary layers, and using the .dockerignore file are just a few ways of keeping your Docker images small.

Dockerfile

A Dockerfile is a text file that consists of a set of instructions on how to build a Docker image. These instructions include specifying the base OS, languages, Docker environment variables, file locations, network ports, and other components needed to run the image. The instructions in the file are executed automatically, in order, when the image is built.
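
As a rough illustration of these instructions, the Python sketch below writes a minimal Dockerfile to disk and assembles the matching docker build command. The base image tag, file names, port, environment variable, and helper function names are assumptions chosen for the example.

```python
from pathlib import Path

# Hypothetical Dockerfile for a small Python web app; every value is illustrative.
DOCKERFILE = """\
# Base image that supplies the OS userland and the language runtime
FROM python:3.12-slim
# Environment variable available during the build and at run time
ENV APP_ENV=production
# Working directory for the instructions that follow
WORKDIR /app
# Copy the dependency list first so this layer is cached between builds
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
# Copy the rest of the application source
COPY . .
# Document the port the app listens on
EXPOSE 8000
# Default command for containers started from the image
CMD ["python", "app.py"]
"""


def write_dockerfile(directory):
    """Write the sample Dockerfile into `directory` and return its path."""
    path = Path(directory) / "Dockerfile"
    path.write_text(DOCKERFILE)
    return path


def build_command(tag, context="."):
    """Return the argument list for `docker build` without running it."""
    return ["docker", "build", "--tag", tag, context]
```

The list returned by build_command("example-app:1.0") can be passed to subprocess.run on a machine with Docker installed. Returning the command instead of running it keeps the sketch usable on systems without Docker.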

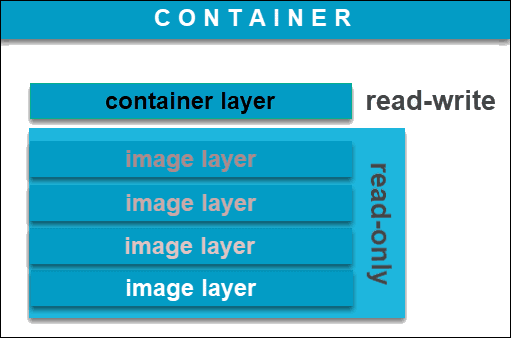

A Docker image has multiple layers. A new read-write layer (container layer) is added once a user runs an image to create a container. The additional layer accepts changes, which the user can commit to create a new Docker image for future use.
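
The layering idea can be modeled in a few lines of Python. The sketch below is a toy model, not how Docker's storage drivers are implemented: each layer is a dictionary that maps file paths to contents, and the file names and contents are made up. It only shows that writes land in the container layer while the image layers stay unchanged.

```python
from collections import ChainMap

# Read-only image layers, listed from the base upward (contents are made up).
base_layer = {"/etc/os-release": "debian", "/usr/bin/python3": "interpreter"}
app_layer = {"/app/app.py": "print('v1')"}


def start_container(*image_layers):
    """Return a container view: an empty writable layer over the image layers."""
    writable_layer = {}
    # ChainMap searches left to right and sends every write to the first mapping,
    # so upper layers shadow lower ones and the image layers are never modified.
    return ChainMap(writable_layer, *reversed(image_layers))


def commit(container):
    """Freeze the container's writable layer into a new read-only layer."""
    return dict(container.maps[0])


container = start_container(base_layer, app_layer)
container["/app/app.py"] = "print('v2')"  # shadows the copy in the image layer
container["/tmp/cache"] = "scratch data"  # new file, stored only in the container layer

new_layer = commit(container)
```

A second container started from the same two layers still sees the original app.py, which mirrors how several containers can share one image. Stacking new_layer on top of the existing layers corresponds to the new image created by a commit.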

Docker Volume

Docker volumes are a better way to preserve data produced by a running container than adding new layers to an image. Volumes allow users to save data, share it between containers, and mount it to new ones.

Volumes are independent of the container life cycle as they are stored on the host.
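
A minimal sketch of this workflow is shown below. The Python helpers only assemble the Docker CLI commands for creating a named volume and mounting it into a container. The volume, container, and image names in the usage note are placeholders, and the function names are hypothetical.

```python
def volume_create_command(name):
    """Return the argument list that creates a named volume."""
    return ["docker", "volume", "create", name]


def run_with_volume_command(image, volume, mount_path, name=None):
    """Return the argument list that starts a container with the volume mounted."""
    cmd = ["docker", "run", "--detach"]
    if name:
        cmd += ["--name", name]
    # --mount attaches the named volume at mount_path inside the container.
    cmd += ["--mount", f"type=volume,source={volume},target={mount_path}", image]
    return cmd
```

For example, run_with_volume_command("postgres:16", "db-data", "/var/lib/postgresql/data", name="db") yields a command whose data directory lives in the db-data volume. Removing the container leaves the volume in place, so a new container that mounts db-data sees the same files.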

Docker Tools

Docker features a rich ecosystem of tools to enhance its functionality and make it easier to work with many containers. Below are some of the most popular Docker tools available at the moment.

Docker Desktop

Docker Desktop is an application that allows users to quickly manage containers on Windows, macOS, and Linux using a graphical user interface. It is a simple way of installing and setting up the entire Docker development environment.

It includes Docker Engine, Docker Compose, Docker CLI client, Docker Content Trust, Kubernetes, and Credential Helper.

Docker Registry

Docker Registry is a system that organizes storage and distribution of Docker images. Each registry consists of repositories that host images. Users can pull images from a repository to their local system or push the image to the repository for easier access.
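
The name used in a pull or push encodes which registry and repository the image belongs to. The Python sketch below is a simplified illustration of how such a name breaks down, including the defaults Docker applies when the registry or tag is omitted. It is not Docker's actual parser: it ignores digests (name@sha256:...) and does no validation, and the example host names are placeholders.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref):
    """Split an image name into registry, repository, and tag."""
    name, tag = ref, "latest"
    # A colon after the last slash separates the tag; an earlier colon is a registry port.
    last_slash = ref.rfind("/")
    last_colon = ref.rfind(":")
    if last_colon > last_slash:
        name, tag = ref[:last_colon], ref[last_colon + 1:]

    first, _, rest = name.partition("/")
    # The first component names a registry only if it looks like a host name.
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", name
        if "/" not in repository:
            # Official images on Docker Hub live under the "library" namespace.
            repository = "library/" + repository
    return ImageRef(registry, repository, tag)
```

With these rules, the short name nginx resolves to the repository library/nginx on docker.io with the tag latest, while localhost:5000/team/app:2.0 points at a registry running on the local machine.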

Docker Hub

Docker Hub is the largest public registry of container images. It supplies over 100,000 images created by open-source projects, software vendors, and the Docker community.

The platform allows users to ship their applications anywhere quickly, collaborate with teammates, and automate builds for faster integration to a development pipeline.

As with repositories on GitHub, developers push container images to Docker Hub, pull them from it, and decide whether to keep them public or private.

Docker Swarm

Docker Swarm is a tool that allows developers to create a cluster of Docker nodes, i.e., physical or virtual machines with Docker installed, and manage it as a single system. Each swarm consists of:

- One or more swarm managers that control the nodes in the swarm and enable the orchestration and scheduling of containers.

- Worker nodes that receive and execute tasks from the swarm manager.

Docker Compose

Docker Compose is a tool that simplifies running and managing multiple containers simultaneously. It strings multiple containers together and controls them through a single coordinated command.

With Docker Compose, users can launch, execute, manage, and close containers with one command. These actions are performed using a YAML file to configure the application's services.
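
The sketch below shows what such a YAML file might look like. The Python code writes a hypothetical two-service Compose file and assembles the docker compose commands that start, inspect, and stop the stack. The service names, image tags, port mapping, and password are placeholders, and the helper functions are illustrative only.

```python
from pathlib import Path

# Hypothetical two-service stack; names, images, ports, and the password are placeholders.
COMPOSE_FILE = """\
services:
  web:
    image: nginx:1.27
    ports:
      - "8080:80"
    depends_on:
      - db
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: example
    volumes:
      - db-data:/var/lib/postgresql/data

volumes:
  db-data:
"""


def write_compose_file(directory):
    """Write the sample Compose file into `directory` and return its path."""
    path = Path(directory) / "compose.yaml"
    path.write_text(COMPOSE_FILE)
    return path


def compose_command(action, compose_path):
    """Return the `docker compose` argument list for a lifecycle action."""
    actions = {
        "start": ["up", "--detach"],  # create and start every service
        "status": ["ps"],             # list the stack's containers
        "stop": ["down"],             # stop and remove the containers
    }
    return ["docker", "compose", "--file", str(compose_path), *actions[action]]
```

Each action covers every service in the file, which is what allows a whole stack to be started or removed with one command.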

Supporting Services

Many third-party solutions integrate Docker support into their service offerings. Below is a list of popular services that allow users to utilize the power of Docker containers:

- Amazon ECS. Amazon Elastic Container Service is a recommended way to run Docker on AWS. It allows users to deploy containerized apps directly to the Amazon cloud.

- AKS. Azure Kubernetes Service supports setting up and testing multi-container applications using Docker and Docker Compose.

- GKE. Google Kubernetes Engine runs containers created from Docker-built images in a GKE cluster.

- Portainer. Portainer is a full-featured container management GUI for Docker. While Docker Desktop can graphically manage deployed containers, creating new deployments still requires the Docker CLI. Portainer also allows users to create deployments from the GUI.

- Kubernetes. This container orchestrator delegates running containers to a container runtime such as containerd or CRI-O, both of which run images built with Docker. Kubernetes administrators can use Docker to pack and ship applications and Kubernetes to deploy and scale them.

What Is Docker Used For?

Thanks to its flexible nature, Docker has various use cases. Below are some of the ways in which application developers use Docker:

- Deploying microservices. Docker supports the microservice approach to app development by offering a simple way to connect components using its built-in container networking features.

- Software testing. Docker containers provide a quick way to start and stop the applications and allow quick iteration between different app versions.

- Deploying database containers. Running a database container alongside an app container simplifies database management. It ensures the app can create and use the database even if the relevant DBMS is not installed on the host system.

- Accessing tools without having to install them. App development often requires tools that are used only once in the process. Using a containerized version of a tool provides easy access to its functionality without installing it.

- Creating easily deployable app stacks. Docker Compose allows users to create a single file to deploy a set of containerized apps. This feature is particularly useful in development, where tool stacks are extensively used.

Docker Benefits

The benefits of Docker range from providing consistent and automated environments to conserving resources. Below is a list of the advantages of developing with Docker.

- Consistency. Docker ensures that your app runs the same across multiple environments. Developers on different machines and operating systems can work together on the same application without encountering platform-related issues.

- Automation. The platform allows you to automate tedious, repetitive tasks and schedule jobs without manual intervention.

- Faster deployments. Since containers share the host's kernel instead of booting a guest OS, container instances start without OS boot time. Therefore, users can perform deployments in seconds. Additionally, existing containers can be shared to create new applications.

- CI/CD support. Docker works well with CI/CD practices as it speeds up deployments, simplifies updates, and allows teammates to work efficiently together.

- Rollbacks and image version control. A container is based on a Docker image. Images can have multiple layers, each representing changes and updates on the base. This feature speeds up the build process and provides version control over the container, allowing developers to roll back to a previous version.

- Modularity. Containers are independent and isolated virtual environments. In a multi-container application, each container has a specific function. By segregating the app, developers can work on a particular part without taking down the entire app.

- Resource and cost-efficiency. Containers do not include guest operating systems, so they are much lighter and smaller than VMs. They use less memory and reuse components thanks to data volumes and images.

Docker Challenges

Although Docker has many advantages, it also comes with a set of drawbacks that are worth considering. The biggest challenges include:

- The lack of support for GUI apps. Docker is not the best choice for running apps that require a graphical interface. It is mainly for applications that run on the command line or use a web UI.

- Security issues. Although Docker provides security by isolating containers from the host and each other, there are certain Docker-specific security risks. Many potential security issues may arise while working with containers, so it is wise to adopt the best Docker security practices that can help prevent attacks and privilege breaches.

- A steep learning curve. Even developers experienced in working with the VM infrastructure need some time to understand Docker concepts. If switching to Docker, take into account the necessary learning curve.

Docker FAQ

The following section contains frequently asked questions regarding Docker's design and usage.

Is Docker a Virtual Machine?

Docker containers and virtual machines are both forms of virtualization, but they differ in their approach. Unlike virtual machines, Docker containers use the host OS's kernel instead of running a guest operating system. This property makes containers less isolated than VMs but allows them to be faster and consume fewer resources.

Is Docker a Framework or a Language?

Docker is neither a framework nor a language. It is a virtualization platform that provides a convenient way to distribute applications.

What Problem Does Docker Solve?

Docker solves multiple problems related to software development and deployment. It helps developers overcome compatibility issues when creating a cross-platform app, facilitates portability and scalability, and improves monitoring.

Note: Check out our in-depth guide on the best tools for Docker container monitoring.

Is Docker's Root-Based Approach Insecure?

The fact that Docker containers run as root by default can present a potential security problem. However, users can reduce this risk by following security best practices, such as specifying non-root users, dropping unnecessary container privileges, and utilizing AppArmor and SELinux.
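
As a sketch of how some of these practices map to docker run options, the Python helper below assembles a command that runs a container as a non-root user, drops all Linux capabilities, and optionally applies an AppArmor profile. The default user ID, the image name in the usage note, and the function itself are assumptions made for this example. The right set of options depends on the application.

```python
def hardened_run_command(image, user="1000:1000", keep_caps=(), apparmor_profile=None):
    """Return a `docker run` argument list with reduced container privileges."""
    cmd = [
        "docker", "run", "--rm",
        "--user", user,                                # run as a non-root UID:GID
        "--cap-drop", "ALL",                           # drop every Linux capability
        "--security-opt", "no-new-privileges=true",    # block privilege escalation
    ]
    for cap in keep_caps:
        # Add back only the capabilities the application needs.
        cmd += ["--cap-add", cap]
    if apparmor_profile:
        cmd += ["--security-opt", f"apparmor={apparmor_profile}"]
    cmd.append(image)
    return cmd
```

For instance, hardened_run_command("example-app:1.0", keep_caps=["NET_BIND_SERVICE"]) keeps only the capability required to bind to ports below 1024.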

Conclusion

In this article, you learned about Docker and its usefulness in software development. After learning about Docker's benefits and challenges, you can decide whether to start using it.

If you are deciding what container engine to use, read our overview of ten Docker alternatives.