Introduction

Kubernetes provides a flexible and robust automation framework for managing, deploying, and scaling containerized applications. Automating infrastructure-related processes helps developers free up time to focus on coding.

This article will delve deeper into what Kubernetes is, how it works, and what makes it an indispensable tool for managing and deploying containers.

What is Kubernetes?

Kubernetes is a container orchestration platform that provides a unified API for the automated management of complex systems spread across multiple servers and platforms. When deploying an app, the user provides Kubernetes with information about the application and the system's desired state. Kubernetes uses its API to coordinate application deployment and scaling across the connected virtual and physical machines.

Although container orchestration is its primary role, Kubernetes performs a broader set of related control processes. For example, it continually monitors the system and makes or requests changes necessary to maintain the desired state of the system components.
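
The comparison of desired and actual state can be illustrated with a minimal Python sketch. This is not Kubernetes code: the `reconcile` function and the dictionaries that map an application name to a replica count are hypothetical. They only show how a control loop might derive corrective actions from the difference between the two states.

```python
def reconcile(desired: dict[str, int], actual: dict[str, int]) -> list[str]:
    """Return the actions needed to move `actual` toward `desired`.

    Both arguments map a (hypothetical) application name to a replica count.
    """
    actions = []
    # Look at every application mentioned in either state.
    for app in sorted(desired.keys() | actual.keys()):
        want = desired.get(app, 0)
        have = actual.get(app, 0)
        if have < want:
            actions.append(f"start {want - have} x {app}")
        elif have > want:
            actions.append(f"stop {have - want} x {app}")
    return actions


desired_state = {"web": 3, "worker": 2}
actual_state = {"web": 1, "worker": 2, "legacy": 1}
print(reconcile(desired_state, actual_state))
# prints ['stop 1 x legacy', 'start 2 x web']
```

In a running cluster, a comparison like this is repeated continuously, so any drift from the desired state is corrected without user input.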

What is Kubernetes Used for?

Kubernetes automates and manages cloud-native containerized applications. It orchestrates the deployment of application containers and helps reduce downtime in a production environment.

Containers are fragile constructs prone to failures that cause applications to malfunction. While users can replace a failed container manually, this kind of maintenance is time-consuming and, in larger deployments, often impossible.

To address this issue, Kubernetes takes over container management by deploying new containers, monitoring them, and restarting or replacing failed pods. These actions do not require the user to interact with the system.

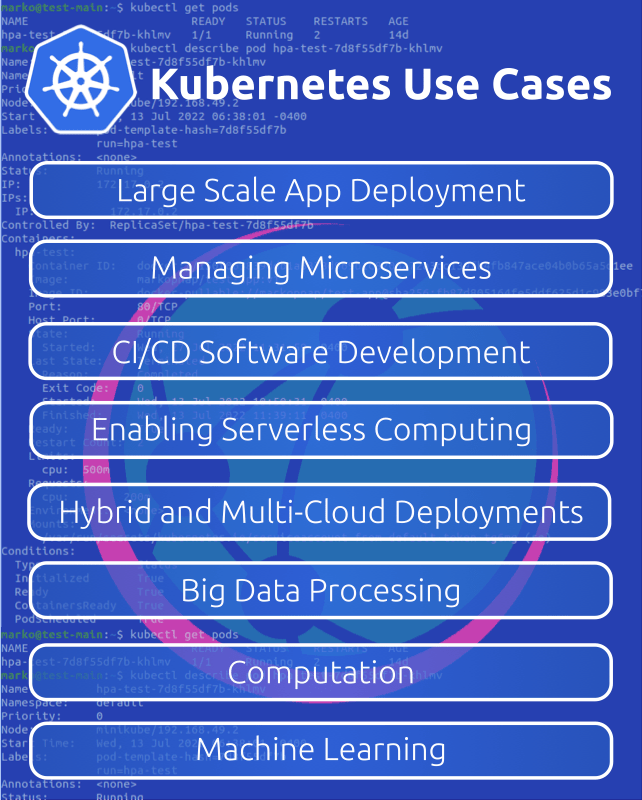

Kubernetes Real-Life Use Cases

Kubernetes is used in various scenarios across the IT industry, business, and science. Use cases for the platform range from assisting large-scale app deployments and coordinating microservices to big data processing and machine learning applications.

Note: Refer to our article on Kubernetes use cases for an in-depth analysis of how Kubernetes is implemented in each scenario.

Below are some examples of companies utilizing Kubernetes in their workflows:

- OpenAI uses Kubernetes support for hybrid deployments to quickly transfer research experiments between the cloud infrastructure and its data center. This enables the company to reduce costs for idle nodes.

- Spotify uses Kubernetes to scale its services, which can receive up to ten million requests per second. Kubernetes' autoscaling features save the time and resources necessary to set up and maintain a service.

- IBM's image trust service, Portieris, runs on Kubernetes to provide IBM Cloud Container Registry image signing capabilities.

- Adidas runs its e-commerce platform and other mission-critical systems on Kubernetes.

- Pinterest uses Kubernetes to manage its multi-layered infrastructure and over a thousand microservices that make up the application.

Note: Read our in-depth article on Kubernetes monitoring tools.

What is the Main Benefit of Kubernetes?

Kubernetes offers various benefits depending on the use case and the project type. However, the main advantage of this orchestration platform lies in how it handles resources.

As an automation tool, Kubernetes excels in resource conservation. It constantly monitors the cluster and chooses where to launch containers based on the resources the nodes consume at that point. Kubernetes can deploy multi-container applications, ensure all the containers are synchronized and communicating, and offer insights into the application's health.

With a tool like Kubernetes, scaling applications becomes more convenient. Organizations can quickly adapt to surges and ebbs in traffic by adding or removing containers depending on momentary workloads.
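
One way to make this concrete is the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The sketch below applies that formula in Python. The function name, the sample numbers, and the default bounds are assumptions for illustration, and the real autoscaler adds details such as a tolerance band that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Scale the replica count in proportion to metric / target, rounded up."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# Traffic surge: 4 pods at 90% average CPU with a 60% target.
print(desired_replicas(4, 90.0, 60.0))  # prints 6
# Traffic ebb: 4 pods at 30% average CPU with the same target.
print(desired_replicas(4, 30.0, 60.0))  # prints 2
```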

Note: Learn more about the reasons to use Kubernetes by reading our article When to Use Kubernetes.

How Does Kubernetes Work?

Kubernetes takes the unstable nature of containers and turns that weakness into an asset: because containers are treated as disposable, a failed one can simply be replaced. Kubernetes needs only a general description of what a cluster should look like. This description is provided as a YAML manifest file passed to Kubernetes using a command-line interface tool.
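
As an illustration of what such a manifest contains, the sketch below builds a minimal Deployment manifest as a Python dictionary and prints it as JSON, a format `kubectl apply -f` accepts alongside YAML. The name `web`, the label `app: web`, and the `nginx:1.25` image are placeholder assumptions, not values from this article.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal Deployment manifest describing the desired state."""
    labels = {"app": name}  # placeholder label tying the pieces together
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,                 # how many pods should run
            "selector": {"matchLabels": labels},  # which pods belong to it
            "template": {                         # template for each pod
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


manifest = deployment_manifest("web", "nginx:1.25", replicas=3)
print(json.dumps(manifest, indent=2))
```

Note that the manifest states only the outcome (three replicas of one image). It does not say which node to use or in what order to start containers.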

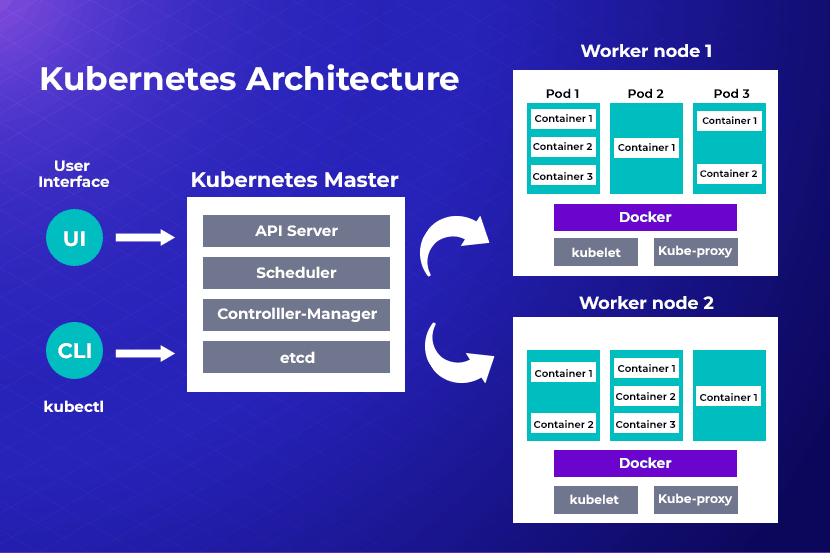

The sections below provide more detail about the essential components of Kubernetes architecture.

Note: See Understanding Kubernetes Architecture with Diagrams, where we break down Kubernetes architecture and examine its core components.

Master Node

A node is a physical machine or VM that is part of a Kubernetes cluster. The master node, referred to as the control plane in current Kubernetes documentation, is the container orchestration layer of a cluster. It is responsible for establishing and maintaining communication within the cluster and for distributing workloads. It administers available worker nodes and assigns them individual tasks.

The master node consists of the following components:

- API Server. Used for communication with all the components within the cluster.

- Key-Value Store (etcd). A lightweight distributed store that holds all cluster data.

- Controller. Uses the API Server to monitor the cluster state and works to bring the actual state in line with the desired state from a manifest file.

- Scheduler. Assigns newly created pods to worker nodes by selecting nodes that satisfy each pod's resource requirements and constraints, which helps balance the workload.
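
The scheduling step can be sketched as a filter phase followed by a scoring phase. The Python example below is a deliberately simplified model, not the kube-scheduler's actual logic. The `Node` class, the `pick_node` function, and the toy score that adds leftover CPU and memory are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int
    free_memory_mib: int


def pick_node(nodes: list[Node], cpu_millis: int,
              memory_mib: int) -> Optional[str]:
    """Pick a node for a pod that requests the given CPU and memory."""
    # Filtering: drop nodes that cannot satisfy the pod's requests.
    feasible = [n for n in nodes
                if n.free_cpu_millis >= cpu_millis
                and n.free_memory_mib >= memory_mib]
    if not feasible:
        return None  # no suitable node, so the pod stays unscheduled

    # Scoring: toy rule that prefers the node with the most resources left.
    def score(n: Node) -> int:
        return (n.free_cpu_millis - cpu_millis) + (n.free_memory_mib - memory_mib)

    return max(feasible, key=score).name


nodes = [
    Node("node-a", free_cpu_millis=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millis=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millis=4000, free_memory_mib=512),
]
print(pick_node(nodes, cpu_millis=1000, memory_mib=1024))  # prints node-b
print(pick_node(nodes, cpu_millis=8000, memory_mib=128))   # prints None
```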

Worker Nodes

Worker Nodes perform tasks assigned to them by the master node. These are the nodes where the containerized workloads and storage volumes are deployed.

A typical worker node consists of the following:

- Kubelet. A daemon that runs on each node and responds to the master's requests to create, destroy, and monitor pods on that machine.

- Container runtime. A component that retrieves images from a container image registry and starts and stops containers.

- Kube-proxy. A network proxy that maintains the network rules that allow communication with pods from inside or outside the cluster.

- Add-ons. Users can add features to the cluster to extend certain functionalities.

- Pods. As the smallest scheduling element in Kubernetes, a pod is a "wrapper" for one or more containers with the application code. To scale your app horizontally within a Kubernetes cluster, you add or remove pods. A node can host multiple pods.

Cluster Management Elements

Kubernetes has several instruments that users or internal components utilize to identify, manage, and manipulate objects within the Kubernetes cluster.

Kubectl

The default Kubernetes command-line interface is called kubectl. It allows users to manage cluster resources directly by sending instructions to the Kubernetes API server. The control plane components then add and remove containers in your cluster so that the actual state of the cluster converges on the defined desired state.

Labels

Labels are simple key/value pairs that can be assigned to pods and other Kubernetes objects. With labels, pods are easier to identify and control. Labels group and organize pods into user-defined subsets. Grouping pods and giving them meaningful identifiers improves a user's control over a cluster.
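
The grouping works through selectors: a selector lists the key/value pairs an object must carry to be part of a subset. The Python sketch below models simple equality-based matching. The pod names, label values, and helper functions are hypothetical.

```python
def matches(labels: dict[str, str], selector: dict[str, str]) -> bool:
    """True if every key/value pair in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(pods: dict[str, dict[str, str]], selector: dict[str, str]) -> list[str]:
    """Return the names of all pods whose labels satisfy the selector."""
    return sorted(name for name, labels in pods.items()
                  if matches(labels, selector))


pods = {
    "web-1": {"app": "web", "env": "prod"},
    "web-2": {"app": "web", "env": "staging"},
    "db-1": {"app": "db", "env": "prod"},
}
print(select(pods, {"app": "web"}))                 # prints ['web-1', 'web-2']
print(select(pods, {"app": "web", "env": "prod"}))  # prints ['web-1']
```

On a real cluster, the first query corresponds to a command such as `kubectl get pods -l app=web`.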

Annotations

Annotations are key/value pairs that store information Kubernetes does not use to identify or select objects. They can contain administrator contact information, general image or build info, specific data locations, or tips for logging. With annotations, this helpful information is kept with the objects it describes and no longer needs to be stored on external resources.

Namespaces

Namespaces keep a group of resources separate. Within a namespace, each name must be unique for a given resource type to prevent name collisions. There is no such limitation when using the same name in different namespaces. This feature allows you to keep detached instances of the same object, with the same name, in a distributed environment.
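
The uniqueness rule can be pictured as a lookup keyed by namespace, resource type, and name. The toy `Cluster` class below is a hypothetical model written for this explanation and does not reflect how Kubernetes stores objects internally.

```python
class Cluster:
    """Toy object store keyed by (namespace, kind, name)."""

    def __init__(self) -> None:
        self._objects: dict[tuple[str, str, str], dict] = {}

    def create(self, namespace: str, kind: str, name: str) -> None:
        key = (namespace, kind, name)
        if key in self._objects:
            # Same kind and name in the same namespace is a collision.
            raise ValueError(
                f"{kind} '{name}' already exists in namespace '{namespace}'")
        self._objects[key] = {}

    def names(self, namespace: str, kind: str) -> list[str]:
        return sorted(n for (ns, k, n) in self._objects
                      if ns == namespace and k == kind)


cluster = Cluster()
cluster.create("dev", "Pod", "web")
cluster.create("prod", "Pod", "web")     # same name, different namespace: fine
try:
    cluster.create("dev", "Pod", "web")  # same name, same namespace: rejected
except ValueError as err:
    print(err)  # prints Pod 'web' already exists in namespace 'dev'
```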

Note: Read our Kubernetes Objects Guide for a detailed overview and examples of Kubernetes objects.

Replication Controllers

When deploying microservices, multiple instances of a given service are often deployed and run simultaneously. Replication controllers manage the number of replicas for any pod instance. Combining replication controllers with user-defined labels allows you to easily manage the number of pods in a cluster using the appropriate label. In current Kubernetes versions, ReplicaSets, usually managed through deployments, have largely taken over this role.

Deployments

A deployment is a mechanism that lays out a template that ensures pods are up and running, updated, or rolled back as defined by the user. A deployment is not limited to a single pod and typically manages several identical pod replicas.
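
The update and rollback behavior can be approximated with a short simulation. The sketch below replaces outdated pods in small batches, loosely modeled on the `maxUnavailable` setting of a Deployment's rolling update strategy. It ignores `maxSurge`, readiness checks, and everything else a real rollout involves, and the function name and version strings are assumptions.

```python
def rolling_update(pods: list[str], new_version: str,
                   max_unavailable: int = 1) -> list[list[str]]:
    """Return the pod versions after each step of a simplified rolling update."""
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    current = list(pods)
    history = [list(current)]  # step 0: the state before the update
    outdated = [i for i, version in enumerate(current) if version != new_version]
    # Replace at most `max_unavailable` pods per step.
    for start in range(0, len(outdated), max_unavailable):
        for i in outdated[start:start + max_unavailable]:
            current[i] = new_version
        history.append(list(current))
    return history


for step in rolling_update(["v1", "v1", "v1"], "v2"):
    print(step)
# prints:
# ['v1', 'v1', 'v1']
# ['v2', 'v1', 'v1']
# ['v2', 'v2', 'v1']
# ['v2', 'v2', 'v2']
```

In this model, a rollback is the same procedure run with the previous version as the target.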

Services

Kubernetes services improve pod networking by giving a group of pods a fixed IP address, port, and DNS name. Because individual pods and their IP addresses come and go, a service provides a stable networking endpoint, so pods can be added or removed without changing the basic network information that clients use.
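
The following Python sketch shows the idea of a stable front end over a changing set of pods. It is a conceptual model only: the `Service` class, the IP addresses, and the round-robin choice of backend are assumptions, and real traffic distribution depends on how the cluster's networking is configured.

```python
class Service:
    """Toy model of a stable endpoint in front of a changing set of pods."""

    def __init__(self, name: str, cluster_ip: str, port: int) -> None:
        self.name = name
        self.cluster_ip = cluster_ip  # stays fixed for the service's lifetime
        self.port = port
        self._endpoints: list[str] = []
        self._next = 0

    def set_endpoints(self, pod_ips: list[str]) -> None:
        """Replace the backend list, for example after pods are recreated."""
        self._endpoints = list(pod_ips)
        self._next = 0

    def route(self) -> str:
        """Pick a backend pod IP for the next request (simple round robin)."""
        if not self._endpoints:
            raise RuntimeError(f"no pods available behind service '{self.name}'")
        target = self._endpoints[self._next % len(self._endpoints)]
        self._next += 1
        return target


svc = Service("web", cluster_ip="10.96.0.10", port=80)
svc.set_endpoints(["10.244.1.5", "10.244.2.7"])
print([svc.route() for _ in range(3)])
# prints ['10.244.1.5', '10.244.2.7', '10.244.1.5']

svc.set_endpoints(["10.244.3.9"])  # the old pods were replaced by a new one
print(svc.cluster_ip, svc.route())  # prints 10.96.0.10 10.244.3.9
```

Clients keep using the same service address throughout, even though every pod behind it has changed.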

Kubernetes vs. Docker Explained

Although Kubernetes and Docker both work with containers, their roles in the container ecosystem are distinct.

Docker is a platform for containerized application deployment that serves as a container runtime for creating and administering containers on a single system. Although tools like Docker Swarm enable Docker to orchestrate containers across several systems, this functionality is separate from the core Docker offering.

On the other hand, Kubernetes manages a node cluster where each node runs a container runtime. This means that Kubernetes is a higher-level platform in the container ecosystem.

Note: Learn more about the differences between Kubernetes and Docker in our Kubernetes vs. Docker article.

Is Kubernetes Replacing Docker?

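Kubernetes is not a Docker alternative. In fact, Kubernetes' design requires a container runtime on every node. Since version 1.24, Kubernetes no longer includes the dockershim component, so clusters typically use runtimes such as containerd or CRI-O, while Docker Engine can still serve as the runtime through the cri-dockerd adapter. Docker also remains a common way to create images, which Kubernetes can run as containers in a cluster regardless of the runtime in use.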

Note: If you are looking for a solution for efficiently managing containerized apps, learn how to set up a Kubernetes cluster with Rancher.

Conclusion

After reading this article, you should have a good understanding of container orchestration and how Kubernetes works. If you want to compare Kubernetes with other tools to choose what is best for you, read our article Terraform vs. Kubernetes.

For more tutorials on Kubernetes, visit our Kubernetes networking guide.