When developing in a microservice architecture, there are many individual components the DevOps team needs to configure. For microservices to work together, they require established communication channels for information sharing and data transfer.

One way to manage and monitor communication between services is to use a service mesh.

What is a Service Mesh?

A service mesh is a dedicated infrastructure layer added to the microservice architecture. Its main role is to ensure fast and secure service-to-service communication. This low-latency tool manages and monitors interservice communication and data sharing.

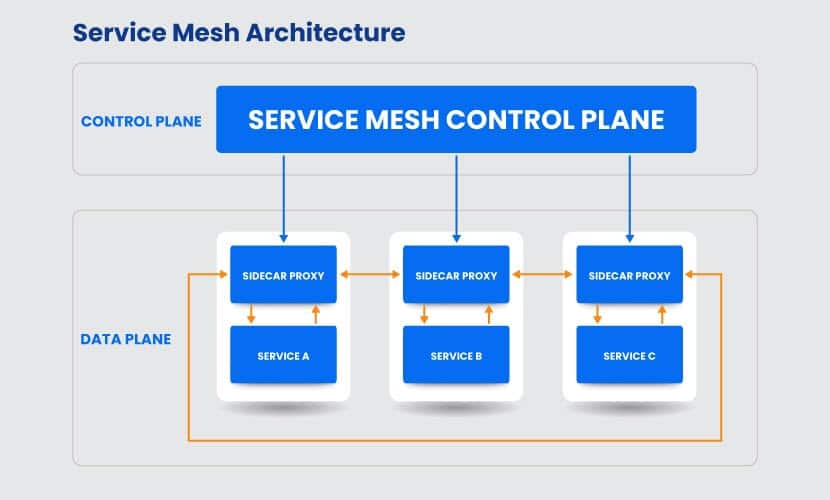

It consists of a control plane, from which developers apply rules and policies to the networking layer. The control plane works directly with the data plane, a collection of sidecar proxies running alongside the services. These proxies handle all communication between the services. They also monitor traffic and provide insight into overall application performance, which helps teams find potential issues and avoid downtime.

The proxies provide valuable capabilities, including:

- Dynamic service discovery

- Load balancing

- Health checks

- Encryption

- Observability

- Circuit breakers

- Staged rollouts with percentage-based traffic split

- Authentication

- Rich metrics

- Authorization
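
To make two of these capabilities concrete, here is a minimal sketch of how a proxy might combine health checks with a percentage-based traffic split. It is an illustration only, not how any particular mesh implements it; the backend names `reviews-v1` and `reviews-v2`, the 90/10 split, and the `pick_backend` helper are assumptions made for the example.

```python
import random
from collections import Counter


def pick_backend(weights, healthy, rng):
    """Pick one healthy backend with probability proportional to its weight."""
    # Skip any backend that failed its health check.
    candidates = [name for name in weights if name in healthy]
    if not candidates:
        raise RuntimeError("no healthy backend available")
    return rng.choices(
        candidates, weights=[weights[name] for name in candidates], k=1
    )[0]


# Hypothetical staged rollout: 90% of requests to v1, 10% to v2.
split = {"reviews-v1": 90, "reviews-v2": 10}
healthy = {"reviews-v1", "reviews-v2"}

rng = random.Random(42)  # seeded so repeated runs produce the same counts
counts = Counter(pick_backend(split, healthy, rng) for _ in range(10_000))
print(counts)

# If v2 fails its health check, all traffic falls back to v1.
print(pick_backend(split, {"reviews-v1"}, rng))
```

Because the decision is made in the proxy, shifting the rollout from 10% to 50% only means changing the weights, not redeploying the services.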

The Rise of Service Mesh Architecture

To fully understand how a service mesh works, we need to look at why such a tool was developed. Service mesh architecture emerged as a solution to a number of problems related to microservices.

Many development teams switched from building monolithic applications to microservice architecture. This change split an application from a single monolithic unit into a system of individual services working together. The application consists of multiple autonomous services, each with its own functionality.

The difficulty with developing such an application is configuring the best way for these services to talk to each other. The application performance depends on the services working together and sharing data to provide the best user experience. For example, a web store could consist of a login service that communicates with a buy service that requires information from an inventory database and so on.

As microservices communicate through APIs, finding the best solution to solve discovery and routing was important. Additionally, developers needed to ensure the communication within the system was secure. While firewalls protected the application from outside attacks, there was a flat, open network within the microservice architecture.

Before service mesh architecture, this task was handled by load balancers. However, that wasn’t a practical solution, especially at a larger scale, due to deployment and cost issues. The service mesh was developed to address the issues mentioned above. It introduced a networking layer with a centralized registry (the control plane) that manages all the services through their sidecar proxies. The proxies are much easier to configure and scale than load balancers. Developers can scale the proxies up and down as needed and change routing rules without modifying the services.
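
The relationship between the centralized registry and the sidecar proxies can be sketched in a few lines. This is a simplified model under stated assumptions, not the design of any real mesh: the `ControlPlane` and `SidecarProxy` classes, the `login` and `inventory` service names (borrowed from the web store example above), and the addresses are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SidecarProxy:
    """Data-plane proxy that sits next to one service instance."""
    service: str
    routes: dict = field(default_factory=dict)  # service name -> list of addresses

    def resolve(self, target):
        """Return the addresses this proxy would forward a call to."""
        return self.routes.get(target, [])


@dataclass
class ControlPlane:
    """Central registry that pushes the current routing table to every proxy."""
    registry: dict = field(default_factory=dict)
    proxies: list = field(default_factory=list)

    def attach(self, proxy):
        self.proxies.append(proxy)
        self._push()

    def register(self, service, address):
        self.registry.setdefault(service, []).append(address)
        self._push()

    def _push(self):
        # Each proxy gets its own copy of the routing table.
        for proxy in self.proxies:
            proxy.routes = {name: list(addrs) for name, addrs in self.registry.items()}


plane = ControlPlane()
login_proxy = SidecarProxy("login")
plane.attach(login_proxy)

plane.register("inventory", "10.0.0.5:8080")
plane.register("inventory", "10.0.0.6:8080")  # scale out without touching the login service
print(login_proxy.resolve("inventory"))
```

The login service never learns the inventory addresses itself. It talks to its local proxy, and the control plane keeps that proxy's routing table current.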

Service Mesh Benefits

The most significant benefits of service mesh are:

- Improved security – Implementing a service mesh into a microservice architecture improves the overall security. This network layer introduces automatic authorization, authentication, encryption, and policy enforcement. It uses mutual Transport Layer Security (mTLS) to ensure all service-to-service communication is secure. Services identify themselves with their TLS certificates to establish a connection. Once they validate their identity, they establish an encrypted channel for data sharing.

- Traffic control and visibility – One of a service mesh’s primary functions is to provide traffic control. It manages east-west traffic, that is, the traffic between services inside the application. All communication goes through the sidecar proxies, which are administered by the control plane. Traffic is routed transparently, giving developers better visibility into all data exchange. With clearer insight into application performance, it is easier to spot potential issues within the system.

- Observability – As the platform manages all traffic, it collects valuable insight on traffic behavior and user interaction. With such large amounts of data, business analysts can plan out a strategy for improving the application.

- Developers focusing on app development instead of networking – Instead of setting up and managing the entire networking layer themselves, the development team can add a service mesh to the architecture. While the platform does require initial configuration, it automates time-consuming tasks related to network management. This allows developers to focus on the microservices. Leaving the service mesh to manage service-to-service interactions saves time and money while also improving productivity.

- Resiliency – A service mesh provides features that help create resilient microservice applications. One such feature is the circuit breaker pattern, which detects failures and latency spikes and stops sending requests to a failing service. Additionally, a service mesh combines service discovery with load balancing to route traffic across the microservice network. Other features that add to its resiliency include retries, timeouts, and deadlines.

- Faster testing and deployment cycles – Using sidecar proxies for pod-to-pod communication is simpler and faster to set up. Instead of coding redundant networking functions into each service, developers deploy proxies and connect them to the services. This speeds up the overall development and testing cycles.
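
The circuit breaker pattern mentioned under resiliency can be illustrated with a short sketch. In a service mesh this logic lives in the sidecar proxy rather than in application code; the Python version below only shows the idea. The `CircuitBreaker` class, its thresholds, and the `flaky_inventory` function are assumptions made for the example.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying the service."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures  # consecutive failures before opening
        self.reset_after = reset_after    # seconds to wait before a trial call
        self.clock = clock
        self.failures = 0
        self.opened_at = None             # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open, failing fast")
            # Cool-down elapsed: allow one trial call (half-open state).
            # A single failure of that trial reopens the circuit.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # any success closes the circuit fully
        return result


def flaky_inventory():
    """Hypothetical downstream call that always fails."""
    raise ConnectionError("inventory service unavailable")


breaker = CircuitBreaker(max_failures=2, reset_after=30.0)
for attempt in range(3):
    try:
        breaker.call(flaky_inventory)
    except (CircuitOpenError, ConnectionError) as exc:
        print(f"attempt {attempt}: {type(exc).__name__}: {exc}")
```

After two consecutive failures the breaker opens, so the third attempt is rejected immediately instead of waiting on a service that is known to be down.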

List of Open Source Service Meshes

There are a number of open source service meshes available. Istio, Linkerd, and Consul are the most widely used.

Below you will find more details about each platform.

Istio

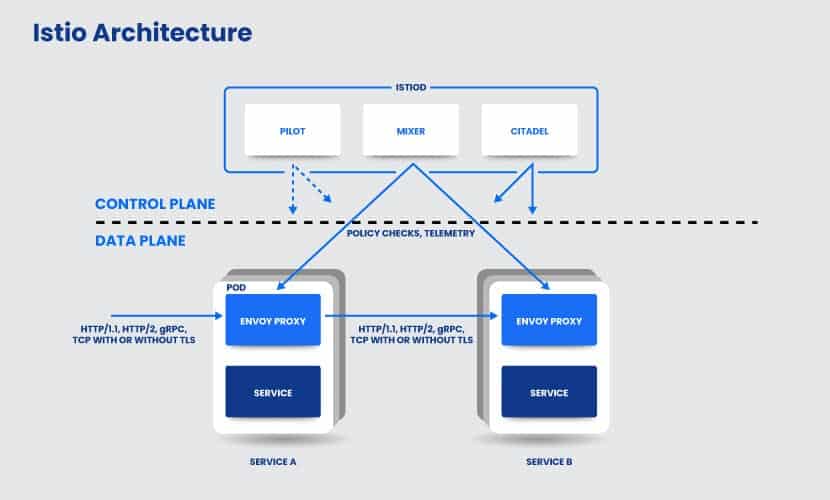

Istio is an open source service mesh created by Google, IBM, and Lyft. It is one of the earliest service mesh platforms. Its control plane, Istiod, consolidates the functionality of formerly separate components (Pilot, Citadel, and Galley) for operating and configuring the service mesh. The data plane is made up of Envoy proxies, which are written in C++. HTTP/TCP connections entering or leaving the mesh are managed by the Gateway, Istio’s edge load balancer. VirtualService and DestinationRule resources define routing rules and policies.
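
As a rough illustration of how VirtualService and DestinationRule resources fit together, the sketch below builds both as Python dictionaries and prints them as a JSON manifest. The service name `reviews`, the `v1`/`v2` subsets, and the 90/10 weights are assumptions for the example, and the `networking.istio.io/v1beta1` API version may differ depending on the Istio release in use.

```python
import json

SERVICE = "reviews"  # hypothetical service name

# DestinationRule: names the subsets (versions) of the service by pod label.
destination_rule = {
    "apiVersion": "networking.istio.io/v1beta1",
    "kind": "DestinationRule",
    "metadata": {"name": SERVICE},
    "spec": {
        "host": SERVICE,
        "subsets": [
            {"name": "v1", "labels": {"version": "v1"}},
            {"name": "v2", "labels": {"version": "v2"}},
        ],
    },
}

# VirtualService: splits HTTP traffic between those subsets by weight.
virtual_service = {
    "apiVersion": "networking.istio.io/v1beta1",
    "kind": "VirtualService",
    "metadata": {"name": SERVICE},
    "spec": {
        "hosts": [SERVICE],
        "http": [
            {
                "route": [
                    {"destination": {"host": SERVICE, "subset": "v1"}, "weight": 90},
                    {"destination": {"host": SERVICE, "subset": "v2"}, "weight": 10},
                ]
            }
        ],
    },
}

manifest = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [destination_rule, virtual_service],
}
print(json.dumps(manifest, indent=2))
```

The DestinationRule defines which versions exist, and the VirtualService decides how much traffic each one receives. Kubernetes accepts JSON manifests as well as YAML, so the printed output should be usable with `kubectl apply -f` on a cluster where Istio is installed.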

To learn more about the most popular open source service mesh solution, refer to What Is Istio? – Architecture, Features, Benefits And Challenges

Linkerd

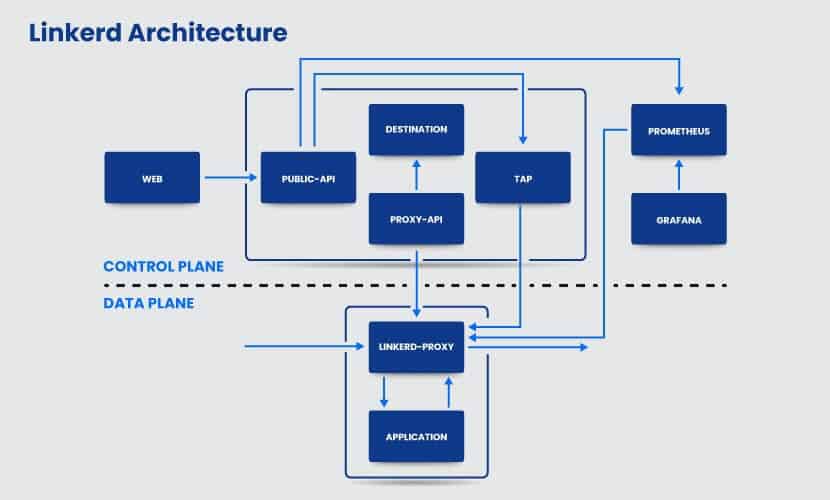

Linkerd is a lightweight open source service mesh, originally developed by Buoyant and now a Cloud Native Computing Foundation (CNCF) project. It is simple to install in a Kubernetes cluster on top of any platform, without additional configuration. Having no configuration is beneficial when working with smaller clusters. However, large-scale projects need configuration options to manage all the services in the cluster successfully.

Its sidecar proxies are written in Rust. Linkerd uses the Controller for the control plane and the Web Deployment for the dashboard. Additionally, it uses Prometheus for collecting and storing metrics and Grafana for rendering and displaying dashboards.

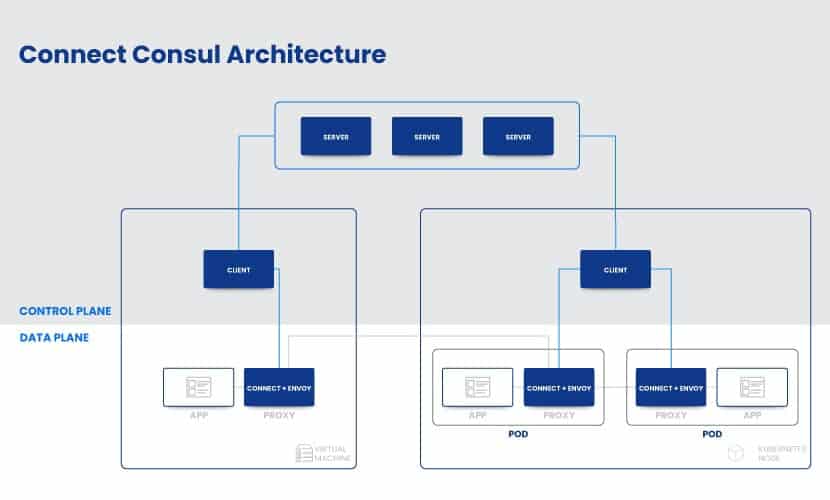

Consul Connect

Consul was first designed as a service discovery tool and later evolved into a service mesh. Unlike Linkerd, Consul Connect includes a lot of configuration, giving you more control over the network environment. Accordingly, it is harder to use than Linkerd. This open source service mesh is platform agnostic, meaning it doesn’t require Kubernetes or Nomad. Still, Nomad does simplify the process of managing microservice communication through Consul.

Microsoft’s Open Service Mesh

Open Service Mesh (OSM) is an Envoy-based CNCF project that implements the Service Mesh Interface (SMI) to manage and secure dynamic microservice applications. It runs Envoy proxies as sidecar containers and configures them through Envoy’s xDS APIs. Its main features include traffic shifting, certificate management, enabling mTLS, automatic sidecar injection, and access control policies.

Kuma

Kuma is an open source control plane for service meshes running on Kubernetes and VMs. It has an L4 + L7 policy architecture that implements features such as service discovery, routing, zero-trust security, and observability. The platform is highly scalable and easy to set up.

Red Hat OpenShift Service Mesh

Red Hat developed its OpenShift Service Mesh for managing microservice applications. It provides multiple interfaces for networking and increased security with Security Context Constraints (SCC). It uses Jaeger for distributed tracing and Kiali for observing interactions between services. Additionally, OpenShift includes the Red Hat 3scale Istio Mixer Adapter for better API security.

AWS App Mesh

Amazon developed App Mesh, a free service mesh that manages all your services within a microservice architecture. It provides high availability and end-to-end visibility for your microservice application. AWS App Mesh integrates with AWS Fargate, Amazon ECS, Amazon EC2, Amazon EKS, Kubernetes, and AWS Outposts. It also exports monitoring data automatically to its integrated monitoring tools.

Refer to our article on ECS vs Kubernetes to learn how they stack up against each other.

Network Service Mesh

Network Service Mesh is an open source CNCF Sandbox project. It is a hybrid/multi-cloud IP service mesh that uses a simple set of APIs to facilitate connectivity. It establishes a connection between services running inside containers and with external endpoints. When implemented, it requires minimal changes to Kubernetes.

In Conclusion

A service mesh simplifies managing and maintaining an application developed using the microservice architecture. It improves the overall security within the microservice architecture and ensures visibility and observability of traffic flow. Overall, the service mesh features enhance performance and speed up the development cycle. With a wide variety of open source solutions available, find one that suits your applications’ needs best.